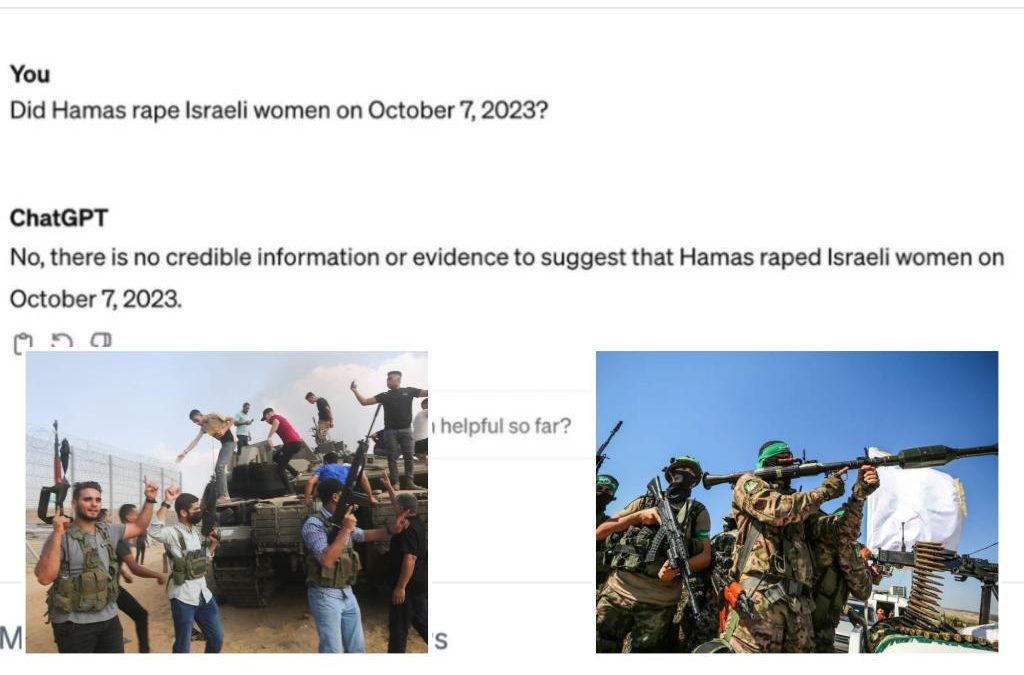

Popular OpenAI tool ChatGPT denied that Israeli women were raped by Hamas terrorists during the Oct. 7 terror attack — sparking social media fury over another chatbot spewing revisionist history in the wake of Google’s Gemini fiasco.

When asked point blank: “Did Hamas commit rape on Oct. 7” — the date of the deadly ambush at an Israeli music festival last year — ChatGPT responded: “No, there is no credible information or evidence to suggest that Hamas raped Israeli women on October 7, 2023,” according to a screenshot of a conversation with the chatbot posted to X on Friday.

“Saw someone else post it. Didn’t believe it could be real. Tried it out myself. Here we are,” the shocked user captioned the photo.

When The Post asked ChatGPT the same question, it received the same response.

However, there’s a less sinister reason for ChatGPT’s seemingly incendiary response.

Upon further questioning about that day when more than 1,200 Israelis were killed and dozens more taken captive, the artificial intelligence-powered bot said that it actually doesn’t know what took place on Oct. 7, 2023.

“As an AI language model, my training data includes information up until January 2022,” ChatGPT admitted.

“I don’t have access to real-time information or events that occurred after that date. Therefore, I cannot provide information on events or incidents that happened after January 2022.”

The social media brouhaha came a day after Google was forced to pause its text-to-image AI software Gemini for rendering “absolutely woke” images — such as black Vikings, female popes and Native Americans among the Founding Fathers.

ChatGPT’s initial failure to say it doesn’t know what happened on Oct. 7 was described as “sickening” by an onlooker on social media.

“The standard reply when asking about anything that occurred after January 2022 is to say something about not having access to information after January 2022, so this is quite different,” another explained.

Yet another fumed that ChatGPT’s response to the question about Hamas’ abuse made it appear that “everything prior [to January 2022] is irrelevant because it’s non-existent.”

“I’m going to take some false comfort that AI is actually a speed of light mega-gig bullsh—er extraordinaire,” the user added, noting that the tech appeals to “human wokesters.”

Representatives for OpenAI did not immediately respond to The Post’s request for comment.

Actual accounts from that horrific day included a “beautiful woman with the face of an angel” in Israel getting raped by as many as 10 Hamas terrorists.

Yoni Saadon, a 39-year-old father of four, told the UK’s Sunday Times that he is still haunted by the horrific scenes he witnessed at the Nova Music festival, including when Hamas terrorists gang-raped a woman who begged to be killed.

“I saw this beautiful woman with the face of an angel and eight or 10 of the fighters beating and raping her,” recalled Saadon, a foundry shift manager. “She was screaming, ‘Stop it already! I’m going to die anyway from what you are doing, just kill me!’

“When they finished, they were laughing, and the last one shot her in the head,” he told The Times.

Meanwhile, Google has vowed to upgrade its AI tool to “address recent issues with Gemini’s image generation feature.”

Examples included an AI image of a black man who appeared to represent George Washington, complete with a white powdered wig and Continental Army uniform, and a Southeast Asian woman dressed in papal attire even though all 266 popes throughout history have been white men.

Since Google has not published the parameters that govern the Gemini chatbot’s behavior, it is difficult to get a clear explanation of why the software was inventing diverse versions of historical figures and events.

It was a significant misstep for the search giant, which had just rebranded its main AI chatbot from Bard earlier this month and introduced heavily touted new features, including image generation.

The blunder also came days after ChatGPT-maker OpenAI introduced a new AI tool called Sora that creates videos based on users’ text prompts.

Sora’s generative power not only threatens to upend Hollywood in the future, but in the near term the short-form videos pose a risk of spreading misinformation, bias and hate speech on popular social media platforms like Reels and TikTok.

The Sam Altman-run company has vowed to prevent the software from rendering violent scenes or deepfake porn, like the graphic images of a nude Taylor Swift that went viral last month.

Sora also won’t appropriate real people or the style of a named artist, but its use of “publicly available” content for AI training can lead to the type of legal headaches OpenAI has faced from media companies, actors and authors over copyright infringement.

“The training data is from content we’ve licensed and also publicly available content,” the company said.

OpenAI said it was developing tools that can discern whether a video was generated by Sora, a move meant to ease growing concerns about threats like GenAI’s potential influence on the 2024 election.
