On a December afternoon in 2020, Wall Street Journal reporter Jeff Horwitz drove to Redwood Regional Park, just east of Oakland, Calif., for a mysterious meeting.

For months, he’d been working on a story about Facebook, albeit with little luck finding anyone from the company willing to talk. But then he reached out to Frances Haugen.

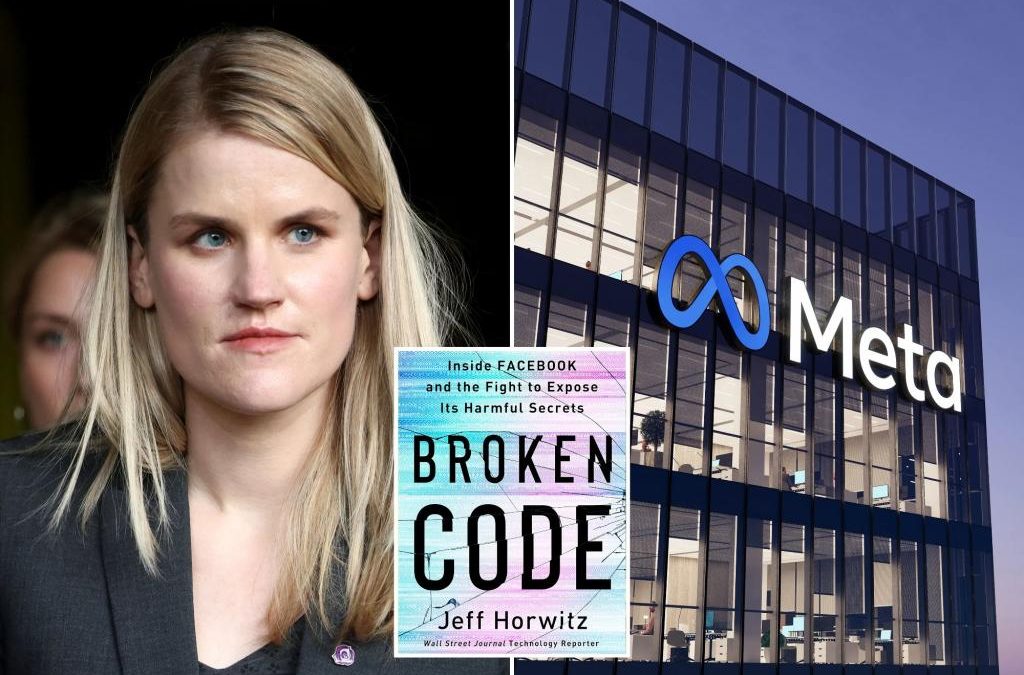

On paper, she didn’t seem like the most obvious candidate for a whistleblower. A mid-level product manager on Facebook’s Civic Integrity team, Haugen, 35, had only been with the social network for a little less than a year and a half. But she was eager.

“People needed to understand what was going on at Facebook, she said, and she had been taking some notes that she thought might be useful in explaining it,” Horwitz writes in his new book, “Broken Code: Inside Facebook and the Fight to Expose Its Harmful Secrets” (Doubleday).

Haugen didn’t want to talk by email or even phone, insisting it was too dangerous.

Instead, she suggested meeting on a hiking trail for privacy, and even sent Horwitz the address via an encrypted messaging app.

They strolled for almost a mile through California’s coastal redwoods, looking for a spot isolated from hikers and joggers.

Haugen’s paranoid maneuvers were worth the wait.

She’d found evidence that “Facebook’s platforms eroded faith in public health, favored authoritarian demagoguery, and treated users as an exploitable resource,” the book says. But she wanted to do more than just smuggle out a few stray documents.

She was “contemplating spending months inside the company for the specific purpose of investigating Facebook,” Horwitz writes.

Neither of them could have anticipated how far that rabbit hole would take them.

Over the coming months, Haugen would bring Horwitz “tens of thousands of pages of confidential documents, showing the depth and breadth of the harm being done to everyone from teenage girls to the victims of Mexican cartels,” writes Horwitz. “The uproar would plunge Facebook into months of crisis, with Congress, European regulators, and average users questioning Facebook’s role.”

In the beginning, Horwitz was intrigued but cautious. Haugen’s earnest belief that “if she didn’t expose what was known inside Facebook, millions of people would likely die” struck him as rather grandiose and melodramatic.

Still, he had nothing but faith in Haugen’s intentions. She wasn’t just a disgruntled employee, but someone who truly believed that exposing Facebook was the only way to save it.

An Iowa native with college professor parents, Haugen had studied electrical and computer engineering at Olin College before heading west to Silicon Valley in the mid-aughts, full of optimism about what social media could accomplish.

She “reflexively accepted the idea that social networks were good for the world, or at worst neutral and entertaining,” writes Horwitz.

Haugen was hired by Facebook in 2019 for a noble mission: to study how misinformation was spread on the site and what could be done to stop it. Her team was given just three months to come up with an actionable game plan, a schedule that Haugen knew was implausible.

When they failed to deliver easy solutions on a short timeline, they were reviewed poorly by upper management.

But Haugen was undeterred, and she had questions, such as why so many employees at Facebook were struggling. They were understaffed, under absurd deadlines, and more often than not ignored when they came up with real, if difficult, ideas on how to change the platform for the better.

“I was surrounded by smart, conscientious people who every day discovered ways to make Facebook safer,” Haugen told the author. “Unfortunately, safety and growth routinely traded off — and Facebook was unwilling to sacrifice even a fraction of a percent of growth.”

Samidh Chakrabarti, a one-time leader of Facebook’s civic-integrity team, assured Haugen that most Facebook workers “accomplish what needs to be done with far less resources than anyone would think possible,” a revelation meant to be inspiring but that struck Haugen as disturbing.

It was a corporate philosophy shared by many authority figures at the company. “Building things is way more fun than making things secure and safe,” Brian Boland, a longtime vice president in Facebook’s Advertising and Partnerships divisions, told the author about Facebook’s unofficial worldview. “Until there’s a regulatory or press fire, you don’t deal with it.”

When Facebook engineers were asked in 2021 about their greatest frustration with the company, they shared similar concerns. “We perpetually need something to fail — often f–king spectacularly — to drive interest in fixing it,” read one response. “Because we reward heroes more than we reward the people who prevent a need for heroism.”

Haugen and Horwitz began meeting regularly, moving to the author’s backyard in Oakland. He bought her a cheap burner phone to take screenshots of files on her work laptop. One of those files was an internal analysis of Facebook’s response to the January 6 riot, illustrated with a cartoon of a dog in a firefighter hat in front of a burning Capitol building.

Haugen and her team were tasked with identifying how Facebook could stop or at least discourage users from using the platform to fan the flames of conspiracy theories like Donald Trump’s “Stop the Steal” election denial in the future.

They created what became an “information corridor,” in which connections were traced between ringleaders, amplifiers and “susceptible users,” those most likely to be swayed by radicalism. There were 12 teams within Facebook, working not just on how to bring down user groups spreading misinformation but also on how to “inoculate potential followers against them,” Horwitz writes.

This didn’t sit well with Haugen, a progressive Democrat with libertarian leanings.

Facebook had “moved from targeting dangerous actors to targeting dangerous ideas, building systems that could quietly smother a movement in its infancy,” Horwitz writes. “She had images of George Orwell’s thought police.”

Though Haugen’s main focus at Facebook was how misinformation was spread, she stumbled upon other damning evidence on a whim. Curious about how teenage mental health was affected by Instagram — the platform bought by Facebook in 2012 — she found a 2019 presentation by user-experience researchers that came to this harrowing conclusion: “We make body image issues worse for one in three teen girls.”

There was also documentation that Facebook was aware its site was used for human trafficking but did nothing to stop it.

“The broader picture that emerged was not that vile things were happening on Facebook—it was that Facebook knew,” Horwitz writes. “It knew the extent of the problems on its platform, it knew (and usually ignored) the ways it might address them, and, above all, it knew how the dynamics of its social network differed from those of either the open internet or offline life.”

On Haugen’s final day as a Facebook employee, May 17, 2021, she decided to go out with a bang.

She’d already collected 22,000 screenshots of 1,200 documents over the span of six months. But as Horwitz waited outside her Puerto Rico apartment (she had moved there from California) with a taxi ready to flee, Haugen downloaded the company’s entire organizational chart, “a hoard of information that surely amounted to the largest leak in the company’s history,” Horwitz writes.

In September 2021, the Facebook Files, which Horwitz spearheaded along with other journalists, were finally published by the Wall Street Journal. Haugen gave a damning interview for “60 Minutes,” and days later testified on Capitol Hill.

“Facebook wants you to believe that the problems we’re talking about are unsolvable,” Haugen told the committee, before explaining exactly how the biggest social media juggernaut on the planet could fix all of those problems.

It’s what set Haugen apart from other whistleblowers. She wasn’t simply trying to point out how her former employer was wrong, burning the bridge as she unloaded secrets. Her optimism about social media may have been deflated by her experiences at Facebook, but she also believed the baby didn’t need to be thrown out with the bathwater.

It’s arguable whether anything on Facebook has changed since her testimony. But Haugen continues to fight for fixing the problems of social media, not just exposing them. She launched a nonprofit last year, Beyond the Screen, devoted to documenting how Big Tech companies are falling short of their “ethical obligations to society” and coming up with plans to help them course-correct.

Haugen hasn’t had any interaction with Facebook since that final day, but she did leave a note on her way out the door. Just before closing her laptop, after downloading files that would prove the company valued the undivided attention of its users over their souls, she typed a parting message that she knew Facebook’s Security team would stumble upon in their inevitable forensic review.

“I don’t hate Facebook,” she wrote. “I love Facebook. I want to save it.”
