Despite the wild success of ChatGPT and other large language models, the artificial neural networks (ANNs) that underpin these systems might be on the wrong track.

For one, ANNs are “super power-hungry,” said Cornelia Fermüller, a computer scientist at the University of Maryland. “And the other issue is [their] lack of transparency.” Such systems are so complicated that no one truly understands what they’re doing, or why they work so well. This, in turn, makes it almost impossible to get them to reason by analogy, which is what humans do—using symbols for objects, ideas, and the relationships between them.

Such shortcomings likely stem from the current structure of ANNs and their building blocks: individual artificial neurons. Each neuron receives inputs, performs computations, and produces outputs. Modern ANNs are elaborate networks of these computational units, trained to do specific tasks.

Yet the limitations of ANNs have long been obvious. Consider, for example, an ANN that tells circles and squares apart. One way to do it is to have two neurons in its output layer, one that indicates a circle and one that indicates a square. If you want your ANN to also discern the shape’s color—say, blue or red—you’ll need four output neurons: one each for blue circle, blue square, red circle, and red square. More features mean even more neurons.
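The growth described above can be made concrete with a little arithmetic. The sketch below is only an illustration: it assumes a design with one output neuron per combination of feature values, and the helper name `one_neuron_per_combination` and the extra "size" feature are hypothetical, not part of any real network.

```python
from itertools import product


def one_neuron_per_combination(features):
    """List every label that needs its own output neuron.

    `features` maps a feature name to its possible values. Under the
    one-neuron-per-combination design, each combination gets a neuron.
    """
    return [" ".join(combo) for combo in product(*features.values())]


# The example from the text: two colors and two shapes.
features = {"color": ["blue", "red"], "shape": ["circle", "square"]}
labels = one_neuron_per_combination(features)
print(len(labels), labels)
# 4 ['blue circle', 'blue square', 'red circle', 'red square']

# Hypothetical third feature, added only to show the multiplication.
features["size"] = ["small", "large"]
print(len(one_neuron_per_combination(features)))  # 8
```

The count is the product of the number of values per feature, so every added feature multiplies the size of the output layer rather than adding to it.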

This can’t be how our brains perceive the natural world, with all its variations. “You have to propose that, well, you have a neuron for all combinations,” said Bruno Olshausen, a neuroscientist at the University of California, Berkeley. “So, you’d have in your brain, [say,] a purple Volkswagen detector.”

Instead, Olshausen and others argue that information in the brain is represented by the activity of numerous neurons. So the perception of a purple Volkswagen is not encoded as a single neuron’s actions, but as those of thousands of neurons. The same set of neurons, firing differently, could represent an entirely different concept (a pink Cadillac, perhaps).

This is the starting point for a radically different approach to computation, known as hyperdimensional computing. The key is that each piece of information, such as the notion of a car or its make, model, or color, or all of it together, is represented as a single entity: a hyperdimensional vector.

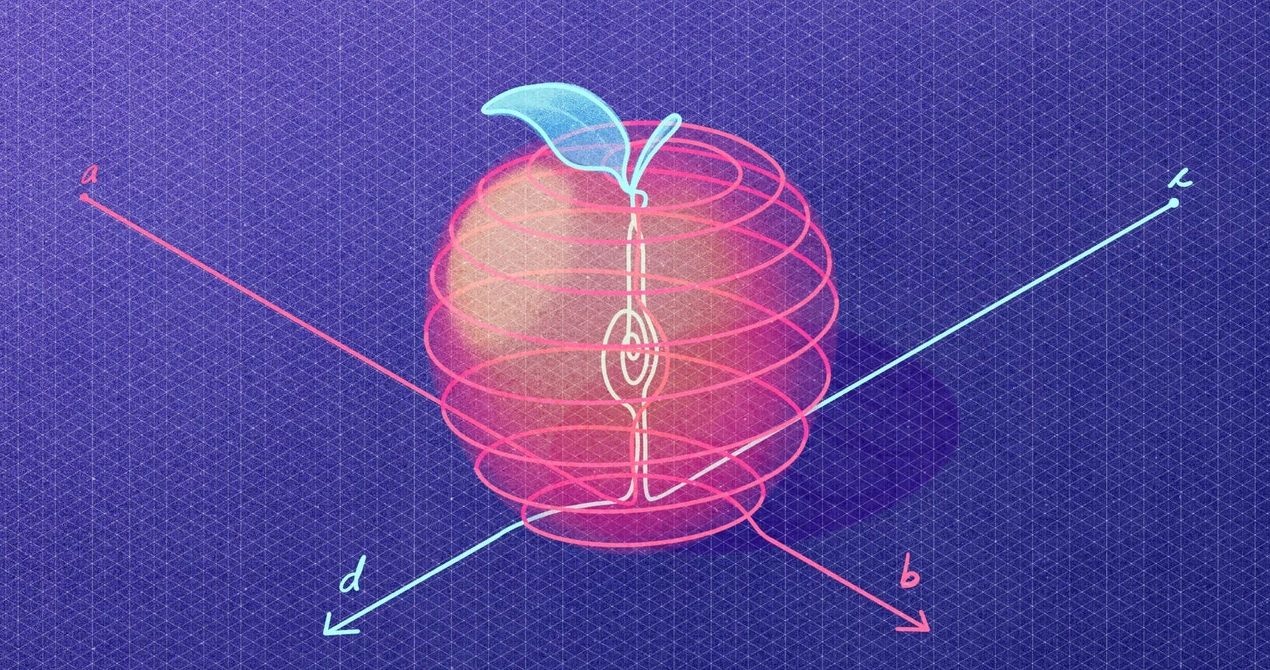

A vector is simply an ordered array of numbers. A 3D vector, for example, comprises three numbers: the x, y, and z coordinates of a point in 3D space. A hyperdimensional vector, or hypervector, could be an array of 10,000 numbers, say, representing a point in 10,000-dimensional space. These mathematical objects and the algebra to manipulate them are flexible and powerful enough to take modern computing beyond some of its current limitations and to foster a new approach to artificial intelligence.
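As a rough sketch of what these objects look like in practice, the snippet below builds a 3D vector and a 10,000-element hypervector as plain lists. The choice of random entries of +1 or -1 is an assumption made for illustration; the text only says a hypervector is an array of numbers. The name `random_hypervector` is hypothetical.

```python
import random

DIM = 10_000  # dimensionality used in the text's example


def random_hypervector(dim=DIM, rng=None):
    """Return a list of `dim` numbers, here drawn at random from {-1, +1}.

    The +1/-1 entries are an illustrative assumption, not the only option.
    """
    rng = rng or random.Random()
    return [rng.choice((-1, 1)) for _ in range(dim)]


# A 3D vector: the x, y, and z coordinates of a point in space.
point_3d = [1.0, 2.0, 3.0]

# A hypervector: a point in 10,000-dimensional space.
hv = random_hypervector(rng=random.Random(0))

print(len(point_3d), len(hv))  # 3 10000
```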

“This is the thing that I’ve been most excited about, practically in my entire career,” Olshausen said. To him and many others, hyperdimensional computing promises a new world in which computing is efficient and robust and machine-made decisions are entirely transparent.

Enter High-Dimensional Spaces

To understand how hypervectors make computing possible, let’s return to images with red circles and blue squares. First, we need vectors to represent the variables SHAPE and COLOR. Then we also need vectors for the values that can be assigned to the variables: CIRCLE, SQUARE, BLUE, and RED.

The vectors must be distinct. This distinctness can be quantified by a property called orthogonality, which means being at right angles. In 3D space, at most three vectors can be mutually orthogonal: for example, one in the x direction, another in the y, and a third in the z. In 10,000-dimensional space, there are 10,000 such mutually orthogonal vectors.
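One common way to test for right angles is the dot product: two nonzero vectors are orthogonal exactly when their dot product is zero. The sketch below checks this for the three axis directions in 3D and for a few axis directions in 10,000 dimensions. The helper names `dot` and `basis_vector` are hypothetical, and only a handful of the 10,000 directions are built to keep the example small.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def basis_vector(dim, index):
    """Vector of length `dim` with a 1 at `index` and 0 everywhere else."""
    v = [0] * dim
    v[index] = 1
    return v


# 3D: the x, y, and z directions are mutually orthogonal.
x, y, z = (basis_vector(3, i) for i in range(3))
print(dot(x, y), dot(x, z), dot(y, z))  # 0 0 0

# 10,000 dimensions: any two distinct axis directions are orthogonal too.
DIM = 10_000
first = basis_vector(DIM, 0)
second = basis_vector(DIM, 1)
last = basis_vector(DIM, DIM - 1)
print(dot(first, second), dot(first, last), dot(second, last))  # 0 0 0

# A vector is not orthogonal to itself.
print(dot(first, first))  # 1
```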
