Sam Altman, the CEO of OpenAI, recently said that China should play a key role in shaping the guardrails that are placed around the technology.

“China has some of the best AI talent in the world,” Altman said during a talk at the Beijing Academy of Artificial Intelligence (BAAI) last week. “Solving alignment for advanced AI systems requires some of the best minds from around the world—and so I really hope that Chinese AI researchers will make great contributions here.”

Altman is in a good position to opine on these issues. His company is behind ChatGPT, the chatbot that’s shown the world how rapidly AI capabilities are progressing. Such advances have led scientists and technologists to call for limits on the technology. In March, many experts signed an open letter calling for a six-month pause on the development of AI algorithms more powerful than those behind ChatGPT. Last month, executives including Altman and Demis Hassabis, CEO of Google DeepMind, signed a statement warning that AI might someday pose an existential risk comparable to nuclear war or pandemics.

Such statements, often signed by executives working on the very technology they are warning could kill us, can feel hollow. For some, they also miss the point. Many AI experts say it is more important to focus on the harms AI can already cause by amplifying societal biases and facilitating the spread of misinformation.

BAAI chair Zhang Hongjiang told me that AI researchers in China are also deeply concerned about new capabilities emerging in AI. “I really think that [Altman] is doing humankind a service by making this tour, by talking to various governments and institutions,” he said.

Zhang said that a number of Chinese scientists, including the director of the BAAI, had signed the letter calling for a pause in the development of more powerful AI systems, but he pointed out that the BAAI has long been focused on more immediate AI risks. New developments in AI mean we will “definitely have more efforts working on AI alignment,” Zhang said. But he added that the issue is tricky because “smarter models can actually make things safer.”

Altman was not the only Western AI expert to attend the BAAI conference.

Also present was Geoffrey Hinton, one of the pioneers of deep learning, the technology that underpins most modern AI. He left Google last month in order to warn people about the risks that increasingly advanced algorithms might soon pose.

Max Tegmark, a professor at the Massachusetts Institute of Technology (MIT) and director of the Future of Life Institute, which organized the letter calling for the pause in AI development, also spoke about AI risks. Yann LeCun, another deep learning pioneer, suggested that the current alarm around AI risks may be a tad overblown.

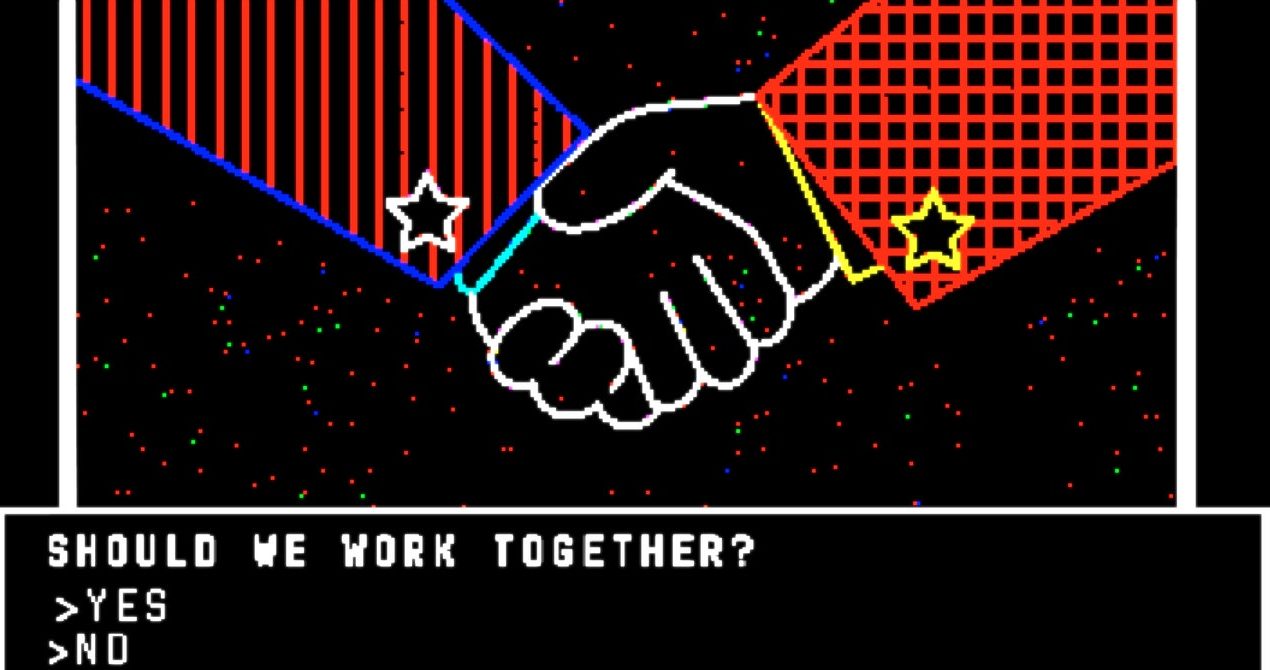

Wherever you stand on the doomsday debate, there’s something nice about the US and China sharing views on AI. The usual rhetoric revolves around the nations’ battle to dominate development of the technology, and it can seem as if AI has become hopelessly wrapped up in politics. In January, for instance, Christopher Wray, the head of the FBI, told the World Economic Forum in Davos that he is “deeply concerned” by the Chinese government’s AI program.

Given that AI will be crucial to economic growth and strategic advantage, international competition is unsurprising. But no one benefits from developing the technology unsafely, and AI’s rising power will require some level of cooperation between the US, China, and other global powers.

As with the development of other “world-changing” technologies, like nuclear power and the tools needed to combat climate change, finding some common ground may fall to the scientists who understand the technology best.
