A top Google executive responsible for the “absurdly woke” AI chatbot Gemini has come under fire after allegedly declaring in tweets that “white privilege is f—king real” and America is rife with “egregious racism.”

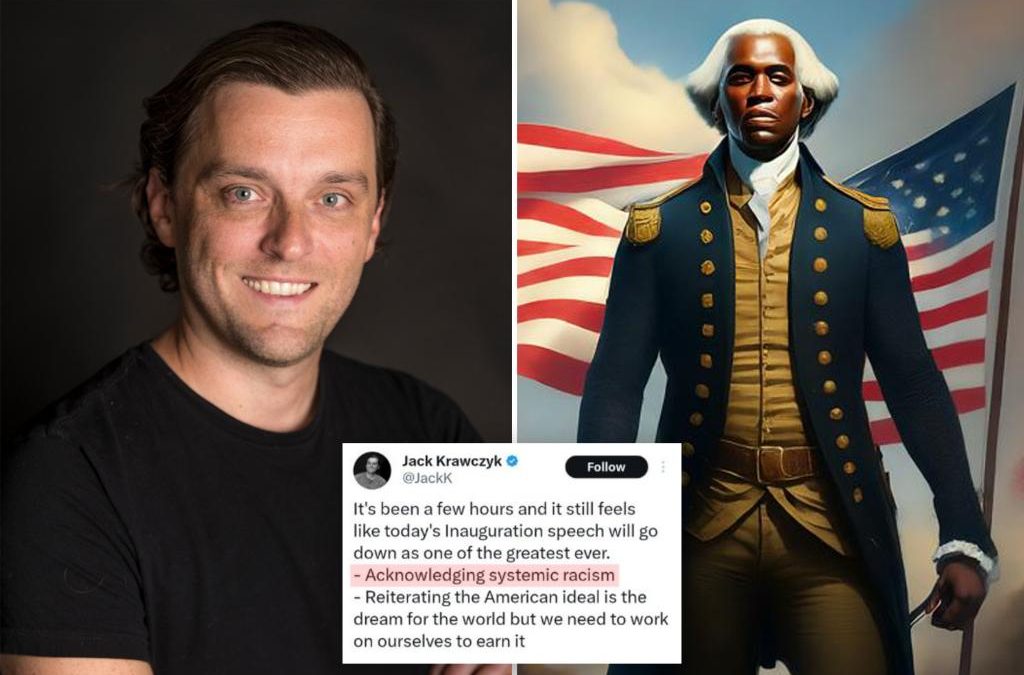

The politically charged tweets allegedly made by Jack Krawczyk, the senior director of product for Gemini Experiences, resurfaced on X on Thursday — a day after The Post reported on Gemini’s strange habit of generating “diverse” images that were historically or factually inaccurate when users asked for pictures of Vikings or America’s Founding Fathers.

By Thursday afternoon, Krawczyk had set his X account to private and scrubbed any mention of Google or his role at the company from his account bio.

Screenshots of the purported tweets by Krawczyk, 40, most of them made before he was hired by Google in 2020, revealed a distinctly ultra-progressive bias.

“White privilege is f—king real,” Krawczyk allegedly wrote in one tweet dated April 13, 2018, according to screenshots of the post circulating on X. “Don’t be an a—hole and act guilty about it – do your part in recognizing bias at all levels of egregious.”

On Jan. 20, 2021, Krawczyk allegedly referred to President Biden’s inaugural address as “one of the greatest ever” for “acknowledging systemic racism” and “reiterating the American ideal is the dream for the world but we need to work on ourselves to earn it.”

In another post from Oct. 21, 2020 – apparently after he voted against Donald Trump in the presidential election – Krawczyk allegedly wrote: “I’ve been crying in intermittent bursts for the past 24 hours since casting my ballot. Filling in that Biden/Harris line felt cathartic.”

The Polish-born tech savant also allegedly described America as a place “where racism is the #1 value our populace seeks to uphold above all” and declared that “we obviously have egregious racism in this country” in other screenshots that made the rounds on social media.

Efforts to reach Krawczyk directly were not immediately successful.

Google temporarily disabled Gemini’s image generation tool Thursday after the kerfuffle, with Krawczyk admitting in a statement that it was “missing the mark” by producing revisionist images.

Google declined to comment on the tweets and referred to the company’s earlier statement on its decision to “pause” Gemini’s image generation tool.

Critics were quick to suggest that Krawczyk’s alleged personal bias had contributed to Gemini’s penchant for prioritizing “diverse” outputs over accuracy.

“The head of Google’s Gemini AI everyone. And you wonder why it discriminates against white people,” said @LeftismForU, an account that compiled many of the screenshots.

“Woke, race obsessed idiot is in charge of product at Gemini,” declared Ian Miles Cheong, an influencer who frequently interacts with Elon Musk on X.

Musk personally weighed in on the tweets, describing the Google employee as an “a—hole” and a “racist douchenozzle.”

Musk also shared The Post’s cover story on Gemini’s bizarre images, writing: “The woke mind virus is killing Western Civilization. Google does the same thing with their search results. Facebook & Instagram too. And Wikipedia.”

Krawczyk was described as Google AI’s “teacher” in a Men’s Health profile from last December.

The Post couldn’t immediately verify the authenticity of every screenshot, but the alleged tweets have certainly gone viral.

A search for the phrase “Jack Krawczyk white privilege” produced his now-viral thread as the first result.

When the bizarre behavior of Gemini’s image generation tool surfaced on Wednesday, Krawczyk was the first Google employee to weigh in on the matter – declaring in a statement that his team was “working to improve these kinds of depictions immediately.”

“Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here,” Krawczyk told The Post.

Since Google does not publish the model that governs the Gemini chatbot’s behavior, it is difficult to pin down the exact cause that led the software to invent diverse versions of historical figures and events.

Google’s “training process” with human input is one plausible explanation for the behavior, according to Fabio Motoki, a lecturer at the UK’s University of East Anglia who co-authored a paper last year that found a noticeable left-leaning bias in ChatGPT.

“Remember that reinforcement learning from human feedback (RLHF) is about people telling the model what is better and what is worse, in practice shaping its ‘reward’ function – technically, its loss function,” Motoki told The Post.

“So, depending on which people Google is recruiting, or which instructions Google is giving them, it could lead to this problem.”

Krawczyk has been a Google employee for four years. He previously worked on the Google Assistant program, according to his LinkedIn account.

Before coming to Google, he held roles at WeWork, where he served as vice president of product management, as well as VSCO and United Masters.
